Long short-term memory (LSTM; Hochreiter & Schmidhuber, 1997) can solve numerous tasks not solvable by previous learning algorithms for recurrent neural networks (RNNs). We identify a weakness of LSTM networks processing continual input streams that are not a priori segmented into subsequences with explicitly marked ends at which the network's internal state could be reset. Without resets, the state may grow indefinitely and eventually cause the network to break down. Our remedy is a novel, adaptive "forget gate" that enables an LSTM cell to learn to reset itself at appropriate times, thus releasing internal resources. We review illustrative benchmark problems on which standard LSTM outperforms other RNN algorithms. All algorithms (including LSTM) fail to solve continual versions of these problems. LSTM with forget gates, however, easily solves them, and in an elegant way.

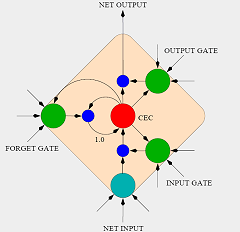
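To make the abstract's point about unbounded state concrete, here is a minimal sketch of the cell state update with and without a forget gate. It assumes the usual update rule, s(t) = f(t) * s(t-1) + (gated input), where the original LSTM corresponds to f(t) = 1. The function name `run_cell_state`, the constant input of 0.5, and the fixed forget activation of 0.9 are made up for illustration; in the paper the gate activations are learned, not fixed.

```python
def run_cell_state(gated_inputs, forget_activations=None):
    """Return the trajectory of a single cell's internal state.

    gated_inputs: per-step contribution to the state (already multiplied
        by the input gate), hypothetical values for illustration.
    forget_activations: per-step forget gate activations in [0, 1];
        None means "no forget gate", i.e. the state is never decayed.
    """
    state = 0.0
    trajectory = []
    for t, x in enumerate(gated_inputs):
        f = 1.0 if forget_activations is None else forget_activations[t]
        state = f * state + x  # forget gate scales the previous state
        trajectory.append(state)
    return trajectory


steps = 1000
inputs = [0.5] * steps  # assumed constant input on a continual stream

no_forget = run_cell_state(inputs)                   # grows without bound
with_forget = run_cell_state(inputs, [0.9] * steps)  # stays bounded

print(no_forget[-1], with_forget[-1])
```

Without the gate the state keeps climbing for as long as the stream runs. With a forget activation below 1 it settles near input / (1 - f), and an activation near 0 would act as the reset the abstract describes.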

There's plenty of math included, which will be very helpful for our own implementation. The article also describes the topology of the network used in the test setup. The articles we read previously were not very clear about exactly which units are connected to the input and output gates.

The seven input units are fully connected to a hidden layer consisting of four memory blocks with 2 cells each (8 cells and 12 gates in total). The cell outputs are fully connected to the cell inputs, all gates, and the seven output units. The output units have additional "shortcut" connections from the input units.
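As a sanity check for our own implementation, the topology above can be tallied in a few lines. This is only a sketch under stated assumptions: three gates per block (input, forget, output), which matches the 12 gates quoted; "fully connected to the hidden layer" read as the inputs feeding both cells and gates; and bias weights left out, since the description above does not mention them. All variable names are ours.

```python
# Sizes taken from the description above.
n_inputs = 7
n_outputs = 7
n_blocks = 4
cells_per_block = 2
gates_per_block = 3  # assumption: input, forget and output gate per block

n_cells = n_blocks * cells_per_block  # 8
n_gates = n_blocks * gates_per_block  # 12

# Connection groups as (source count, target count), biases excluded.
connections = {
    "input -> cells": (n_inputs, n_cells),
    "input -> gates": (n_inputs, n_gates),  # assumption: gates count as hidden layer
    "cells -> cells": (n_cells, n_cells),
    "cells -> gates": (n_cells, n_gates),
    "cells -> output": (n_cells, n_outputs),
    "input -> output (shortcut)": (n_inputs, n_outputs),
}

weights_per_group = {name: src * dst for name, (src, dst) in connections.items()}
total_weights = sum(weights_per_group.values())

for name, count in weights_per_group.items():
    print(f"{name}: {count}")
print("total (without biases):", total_weights)
```

Under these assumptions the tally comes to 405 weights. Any bias weights would come on top of that.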


- Jasper
